Using GPUs with Virtual Machines on vSphere

🎯 Purpose of the Article

This article introduces the foundational concepts and options for integrating GPU acceleration into VMware vSphere environments, particularly for machine learning (ML) and compute-intensive workloads.

As organizations increasingly adopt machine learning (ML), artificial intelligence (AI), and data-intensive workloads, the demand for GPU-accelerated computing has surged. If you’re a vSphere administrator or infrastructure architect, you’ve likely been asked by data scientists, ML engineers, or developers to provide GPU-capable virtual machines (VMs). This article kicks off a series that explores how VMware vSphere supports GPU compute workloads, starting with an overview of the available options and considerations.

🧠 Key Concepts

- GPU Compute: Refers to using GPUs in VMs for general-purpose computing, not just graphics.

- Near Bare-Metal Performance: With the right setup, GPU performance in VMs can closely match that of physical servers.

Why GPUs Matter for ML Workloads

GPUs are essential for accelerating ML tasks such as training, inference, and development. These workloads involve massive matrix operations that GPUs handle far more efficiently than traditional CPUs. The result? Faster time to insights and improved productivity for your teams.

Beyond VDI: GPU Compute on vSphere

While GPUs have traditionally been associated with Virtual Desktop Infrastructure (VDI), vSphere supports a broader use case known as GPU Compute. This allows users to run GPU-accelerated applications in VMs—similar to how they would on bare metal or in public cloud environments.

VMware, in collaboration with technology partners like NVIDIA, offers flexible GPU consumption models that maximize infrastructure ROI while meeting diverse user needs.

Key Questions to Ask Before You Begin

To successfully deploy GPU-enabled VMs, you’ll need to gather information from both your end-users and your hardware/software vendors. Consider:

- What type of workloads will be run (e.g., ML training vs. inference)?

- What performance expectations do users have?

- What GPU hardware is available or needed?

- What licensing or software components are required?

Performance Expectations

GPU performance in vSphere VMs can approach near bare-metal levels, depending on the chosen technology. Future articles in this series will dive deeper into performance benchmarks and comparisons. For now, VMware’s performance engineering team has published initial results that demonstrate the viability of GPU compute in virtualized environments.

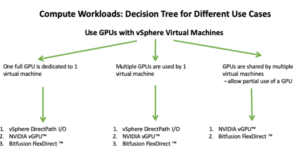

GPU Usage Models in vSphere

One of the first decisions you’ll face is how GPUs will be consumed in your environment. VMware supports multiple GPU usage models, each suited to different scenarios:

Common GPU Configuration Options:

| GPU Configuration | Use Case |
|---|---|
| Direct Pass-Through (vDGA) | High-performance, single-user workloads |
| Shared GPU (vGPU) | Multi-user environments, flexible resource allocation |
| Virtual Compute Server (vCS) | ML/AI workloads with compute-focused GPU sharing |

These configurations are enabled through technologies like NVIDIA vGPU, formerly known as NVIDIA GRID. The vGPU family includes products such as Virtual Compute Server (vCS) and Virtual Data Center Workstation (vDWS), each tailored to specific workload types.

🚀 Why Use GPUs in vSphere?

- Speed: GPUs accelerate ML tasks like training and inference by handling large matrix operations faster than CPUs.

- Demand: Data scientists and developers increasingly require GPU-powered environments for AI, ML, and data analytics.

- Flexibility: vSphere supports GPU usage beyond traditional VDI (Virtual Desktop Infrastructure), enabling broader compute use cases.

🛠️ Deployment Options

VMware offers multiple GPU deployment models, each suited to different needs:

| Use Case | Technology | Description |
|---|---|---|
| Dedicated GPU | DirectPath I/O | Assigns a full GPU to a single VM. Best for high-performance needs. |
| Shared GPU | NVIDIA vGPU | Allows multiple VMs to share a single GPU. Ideal for cost-efficiency and flexibility. |
| Networked GPU | Bitfusion | Enables GPU access over the network, decoupling GPU from physical servers. |

These options are supported through partnerships with vendors like NVIDIA, offering tools like NVIDIA Virtual Compute Server (vCS) and vDWS.

📈 Performance Considerations

- Performance varies by technology.

- DirectPath I/O offers the highest performance but lacks flexibility.

- NVIDIA vGPU balances performance with scalability.

- Bitfusion enables dynamic GPU allocation across the data center.

🧭 Decision-Making Guidance

System administrators should:

- Understand user needs (e.g., training vs. inference).

- Evaluate hardware/software compatibility.

- Choose the GPU model that aligns with performance, flexibility, and cost goals.

VMDirectPath I/O (Passthrough Mode)

In the first part of this series, we introduced the concept of GPU Compute on vSphere and outlined the various methods available for enabling GPU acceleration in virtual machines. In this second installment, we focus on VMDirectPath I/O, also known as passthrough mode, which allows a virtual machine to directly access a physical GPU device.

What is VMDirectPath I/O?

VMDirectPath I/O enables a GPU to be directly assigned to a VM, bypassing the ESXi hypervisor. This direct access delivers near-native performance, typically within 4–5% of bare-metal speeds. It’s an ideal starting point for organizations transitioning from physical workstations to virtualized environments for GPU workloads.

Key Benefits of Passthrough Mode:

- High performance: Minimal overhead due to direct device access.

- Simplicity: No third-party drivers required in the ESXi hypervisor.

- Dedicated resources: Each GPU is assigned to a single VM, ensuring full utilization.

- Cloud replication: Mimics public cloud GPU instances in a private cloud setup.

Limitations:

- No GPU sharing between VMs.

- vSphere features like vMotion, DRS, and Snapshots are not supported.

- Requires careful hardware and firmware configuration.

Step-by-Step Setup Guide

1. Host Server Configuration

1.1 Check GPU Compatibility

Ensure your GPU is supported by your server vendor and can operate in passthrough mode. Most modern GPUs support this.

1.2 BIOS Settings

If your GPU maps more than 16GB of memory, enable the corresponding BIOS setting, which vendors label with names such as:

- “Above 4G decoding”

- “Memory mapped I/O above 4GB”

- “PCI 64-bit resource handling above 4G”

This setting is typically found in the PCI section of the BIOS. Consult your vendor documentation for exact terminology.

1.3 Enable GPU for Passthrough in vSphere

Use the vSphere Client to enable the GPU for DirectPath I/O:

- Navigate to Configure → Hardware → PCI Devices → Edit.

- Check the box next to your GPU device.

- Click OK and reboot the host server.

After reboot:

- Go to Configure → Hardware → PCI Devices.

- Confirm the GPU appears under “DirectPath I/O PCI Devices Available to VMs”.

2. Virtual Machine Configuration

2.1 Create the VM

Create a new VM as usual, but pay special attention to the boot firmware setting.

2.2 Enable EFI/UEFI Boot Mode

- Navigate to Edit Settings → VM Options → Boot Options.

- Set Firmware to EFI or UEFI.

This is required for proper GPU passthrough functionality.

2.3 Adjust Memory Mapped I/O Settings (if needed)

If your GPU maps more than 16GB of memory (e.g., NVIDIA Tesla P100), you’ll need to configure additional VM parameters.

Steps:

- Consult your GPU vendor’s documentation for PCI BAR memory requirements.

- If unsure, enable passthrough and attempt to boot the VM.

- If the boot fails, check the VM’s vmware.log for memory mapping errors.

Use Cases for VMDirectPath I/O

- Single-user ML training or inference

- GPU-intensive development environments

- Private cloud replication of public cloud GPU instances

This method is best suited for scenarios where dedicated GPU access is required and VM mobility features are not critical.

2.3 Configuring Memory Mapped I/O for High-End GPUs

Some GPUs, like the NVIDIA Tesla P100 or V100, require large memory mappings (>16GB). These mappings are defined in the PCI Base Address Registers (BARs). If your GPU falls into this category, you’ll need to configure two advanced VM parameters:

Steps in vSphere Client:

- Navigate to Edit Settings → VM Options → Advanced → Configuration Parameters → Edit Configuration.

- Add the following parameters:

- pciPassthru.use64bitMMIO: Set to TRUE to enable 64-bit memory mapping.

- pciPassthru.64bitMMIOSizeGB: Set based on the number of high-end GPUs assigned to the VM.

How to Calculate MMIO Size:

- Multiply the number of GPUs by 16GB (or by the GPU’s BAR size, if larger)

- Round up to the next power of two, moving up even when the product is already a power of two

Example:

- 2 GPUs → 2 × 16 = 32 → Round up to 64

- 1 NVIDIA V100 (32GB BAR) → Round up to 64

This ensures the VM can properly map the GPU’s memory regions.
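The calculation above can be sketched as a small shell helper. This is an illustration rather than a VMware tool: the function name `mmio_size_gb` is hypothetical, and it assumes that "round up to the next power of two" means the first power of two strictly greater than the product, which is how both examples above behave.

```shell
# Hypothetical helper: suggest a value for pciPassthru.64bitMMIOSizeGB.
# $1 = number of GPUs assigned to the VM
# $2 = memory mapped per GPU in GB (defaults to 16)
mmio_size_gb() {
  local gpus=$1
  local per_gpu_gb=${2:-16}
  local total=$(( gpus * per_gpu_gb ))
  local size=1
  # Double until the value is strictly greater than the total,
  # so a total of 32 yields 64, matching the examples above.
  while [ "$size" -le "$total" ]; do
    size=$(( size * 2 ))
  done
  echo "$size"
}

mmio_size_gb 2 16   # two GPUs at 16 GB each -> prints 64
mmio_size_gb 1 32   # one GPU with a 32 GB BAR -> prints 64
```

The printed number is the value to enter for the MMIO size parameter in the VM's configuration parameters. Treat it as a starting point and confirm it against your GPU vendor's BAR documentation.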

2.4 Installing the Guest Operating System

Install an EFI/UEFI-capable OS (e.g., modern Linux or Windows versions). This is essential for GPUs requiring large MMIO regions.

Once the OS is installed, install the vendor-specific GPU driver inside the guest OS.

2.5 Assigning the GPU to the VM

Before assigning the GPU:

- Power off the VM.

Then:

- In the vSphere Client, go to Edit Settings → Add New Device → PCI Device.

- Select the GPU from the list.

- Confirm it appears in the VM’s hardware list (e.g., “PCI Device 0”).

2.6 Reserving VM Memory

To ensure stability and performance:

- Set a memory reservation equal to the VM’s total configured memory.

- Navigate to Edit Settings → Virtual Hardware → Memory → Reservation.

This guarantees that the VM has exclusive access to the required memory.

2.7 Verifying GPU Access

After powering on the VM:

- Linux: Run

- Windows: Open Device Manager and check under Display Adapters

If the GPU appears, it’s ready for use in passthrough mode.
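On a Linux guest, one common way to perform this check is to list the PCI devices and filter for the vendor name. The sketch below assumes the `lspci` utility (from the pciutils package) is installed in the guest. The helper name `has_nvidia_device` is hypothetical, and the match on "nvidia" only suits NVIDIA GPUs.

```shell
# Hypothetical helper: reads a PCI device listing on stdin and
# succeeds if any line mentions NVIDIA (case-insensitive).
has_nvidia_device() {
  grep -qi 'nvidia'
}

# Usage inside the guest (shown as a comment because it needs real hardware):
#   lspci | has_nvidia_device && echo "GPU visible to the guest"
```

Once the vendor driver is installed in the guest, running `nvidia-smi` gives a more detailed confirmation.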

3. Advanced Scenarios

3.1 Multiple VMs Using Passthrough

To deploy multiple VMs:

- Clone a base VM before assigning PCI devices.

- Assign a dedicated GPU to each VM individually.

3.2 Multiple GPUs in One VM

You can assign multiple GPUs to a single VM using the same steps. Just ensure:

- MMIO size is calculated correctly.

- Each GPU is added via the PCI Device menu.

This setup is ideal for large-scale ML training or GPU-intensive simulations.

Summary

VMDirectPath I/O is a powerful method for delivering near-native GPU performance in vSphere. While it comes with limitations (no vMotion, DRS, or snapshots), it’s perfect for dedicated workloads and private cloud replication of GPU-enabled environments.

In the next article, we’ll explore shared GPU models like NVIDIA vGPU, which offer more flexibility and scalability for multi-user environments.

Installing NVIDIA Virtual GPU Technology

In this third installment of our series, we explore the NVIDIA vGPU technology—formerly known as NVIDIA GRID—and how it enables flexible, high-performance GPU usage in VMware vSphere environments. This article focuses on compute workloads such as machine learning, deep learning, and high-performance computing (HPC), rather than virtual desktop infrastructure (VDI).

What is NVIDIA vGPU?

NVIDIA vGPU allows virtual machines to share GPU resources or dedicate full GPUs, depending on workload requirements. It’s ideal for environments where:

- Applications don’t require full GPU power.

- GPU resources are limited and need to be shared.

- Flexibility is needed between full and partial GPU allocation.

Key Products in the NVIDIA vGPU Family:

- NVIDIA Virtual Compute Server (vCS) – Recommended for ML/AI workloads.

- NVIDIA Quadro Virtual Data Center Workstation (vDWS) – Previously used for compute workloads, now more focused on graphics.

Architecture Overview

NVIDIA vGPU consists of two main components:

- NVIDIA Virtual GPU Manager – Installed as a VIB (VMware Installation Bundle) on the ESXi hypervisor.

- Guest OS vGPU Driver – Installed inside the virtual machine.

This setup enables GPU virtualization and management across vSphere environments.

1. Setting Up NVIDIA vGPU on the vSphere Host

1.1 Configure GPU for vGPU Mode

Access the ESXi host via SSH or the shell and run: esxcli graphics host set --default-type SharedPassthru

Alternatively, use the vSphere Client:

- Navigate to Configure → Hardware → Graphics → Host Graphics → Edit and select Shared Direct.

Reboot the host after changing the setting.

1.2 Verify Host Graphics Settings

Run: esxcli graphics host get

Default Graphics Type: SharedPassthru

Shared Passthru Assignment Policy: Performance
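This check can also be scripted, for example when preparing several hosts. The sketch below assumes the output keeps the `Default Graphics Type:` line format shown above. The helper name `default_graphics_type` is hypothetical.

```shell
# Hypothetical helper: reads `esxcli graphics host get` output on stdin
# and prints only the value of the "Default Graphics Type" field.
default_graphics_type() {
  sed -n 's/^[[:space:]]*Default Graphics Type:[[:space:]]*//p'
}

# Usage on the ESXi host (shown as a comment because it needs a real host):
#   [ "$(esxcli graphics host get | default_graphics_type)" = "SharedPassthru" ] \
#     && echo "Host is set for vGPU"
```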

1.3 Install NVIDIA vGPU Manager VIB

Before installation:

- Place the host in maintenance mode: esxcli system maintenanceMode set --enable true

- Install the VIB (adjust path as needed): esxcli software vib install -v /vmfs/volumes/<datastore>/NVIDIA/NVIDIA-VMware_ESXi_6.7_Host_Driver_<version>.vib

Message: Operation finished successfully.

Reboot Required: false

VIBs Installed: NVIDIA_bootbank_<driver_name>

- Exit maintenance mode: esxcli system maintenanceMode set --enable false

1.4 Verify VIB Installation

Run: esxcli software vib list | grep -i NVIDIA

This confirms the NVIDIA driver is installed.

1.5 Confirm GPU Operation

Run: nvidia-smi

This displays GPU status, usage, and vGPU profiles.

1.6 Check GPU Virtualization Mode

Run: nvidia-smi -q | grep -i virtualization

Virtualization mode: Host VGPU

1.7 Disabling ECC (Pre-vGPU 9.0 Only)

If you’re using NVIDIA vGPU software prior to release 9.0, you must disable ECC (Error Correcting Code) on supported GPUs (e.g., Tesla V100, P100, P40, M6, M60), as ECC is not supported in those versions.

- Disable ECC on ESXi: nvidia-smi -e 0

- Verify ECC Status: nvidia-smi -q

Expected output:

ECC Mode

Current : Disabled

Pending : Disabled

Note: ECC is supported in NVIDIA vGPU 9.0 and later, so this step can be skipped if you’re using a newer release.

2. Choosing the vGPU Profile for a VM

Once the vGPU Manager is installed and operational, you can assign a vGPU profile to your virtual machine. Profiles define how much GPU memory and compute capability a VM can access.

Profile Selection in vSphere Client:

- Right-click the VM → Edit Settings

- Go to the Virtual Hardware tab

- Use the GPU Profile dropdown to select a profile

Profile Naming Convention:

- Example: grid_P100-8q → Allocates 8GB of GPU memory

- Example: grid_P100-16q → Allocates full 16GB, dedicating the GPU to one VM

With vCS (Virtual Compute Server) on vSphere 6.7 Update 3 or later, you can assign multiple vGPU profiles to a single VM, enabling access to multiple physical GPUs.
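To make the naming convention concrete, the sketch below splits a profile name into its parts. It assumes names of the form `grid_<model>-<GB><letter>`, as in the two examples above, where the number is the GPU memory in GB and the trailing letter identifies the vGPU type. The function name `parse_vgpu_profile` is hypothetical, and profile names that do not follow this pattern are not handled.

```shell
# Hypothetical helper: split a vGPU profile name such as grid_P100-8q
# into "<model> <memory in GB> <type letter>".
parse_vgpu_profile() {
  local profile=$1
  local name=${profile#grid_}     # strip the "grid_" prefix -> P100-8q
  local model=${name%%-*}         # text before the dash     -> P100
  local suffix=${name#*-}         # text after the dash      -> 8q
  local fb_gb=${suffix%?}         # drop the final letter    -> 8
  local kind=${suffix#"$fb_gb"}   # the final letter         -> q
  echo "$model $fb_gb $kind"
}

parse_vgpu_profile grid_P100-8q    # prints: P100 8 q
parse_vgpu_profile grid_P100-16q   # prints: P100 16 q
```

Comparing the memory figure with the GPU's total memory shows how many VMs can share one device. On a 16GB P100, an 8GB profile leaves room for two VMs, while the 16GB profile dedicates the GPU to one.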

3. Installing the Guest OS vGPU Driver

Ensure the driver version matches the vGPU Manager version installed on ESXi.

3.1 Install Developer Tools (Ubuntu Example):

sudo apt update

sudo apt upgrade

sudo apt install build-essential

3.2 Prepare the NVIDIA vGPU Driver:

- Download the .run file from NVIDIA’s site (e.g., NVIDIA-Linux-x86_64-390.42-grid.run)

- Copy it to the VM

3.3 Exit X-Windows Server:

- Ubuntu: sudo service lightdm stop

- CentOS/RHEL: sudo init 3

3.4 Install the Driver:

chmod +x NVIDIA-Linux-x86_64-390.42-grid.run

sudo sh ./NVIDIA-Linux-x86_64-390.42-grid.run

Verify installation: nvidia-smi

3.5 Apply NVIDIA License

Edit the license config file: sudo nano /etc/nvidia/gridd.conf

Add:

ServerAddress=10.1.2.3

FeatureType=1

Restart the service: sudo service nvidia-gridd restart

Verify licensing: sudo grep grid /var/log/messages

Look for messages confirming license acquisition.

4. Installing and Testing CUDA Libraries

4.1 Using Containers (Recommended):

Use NVIDIA Docker and pre-built containers for CUDA and ML frameworks. This simplifies version management and avoids manual dependency resolution.

Refer to VMware’s guide:

“Enabling Machine Learning as a Service with GPU Acceleration”

4.2 Manual Installation (Optional):

You can manually install CUDA libraries and frameworks if containerization is not preferred. Ensure compatibility with the vGPU driver and guest OS.


4.2.1 Download the CUDA Packages

Ensure compatibility between the CUDA version and the NVIDIA vGPU driver installed in your guest OS. For example, CUDA 9.1 is compatible with the 390 series of NVIDIA drivers.

Use wget or a browser to download the following packages:

⚠️ Always verify compatibility with your driver and OS version before downloading.

4.2.2 Install the CUDA Libraries

Run the installer: sudo sh cuda_9.1.85_387.26_linux

- Accept the EULA

- When prompted to install the driver, choose No

- Say Yes to installing libraries, symbolic links, and samples

4.3 Testing CUDA Functionality

Run Sample CUDA Programs

After installation, compile and run the sample programs:

- deviceQuery – Verifies GPU detection and basic functionality

- vectorAdd – Tests GPU compute capability

Successful execution confirms that the GPU is correctly configured and ready for ML workloads.
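A minimal sketch of the build-and-run step follows. It assumes the CUDA 9.1 runfile installed the samples in its default location, `~/NVIDIA_CUDA-9.1_Samples`; adjust the paths if you chose a different directory. The helper name `sample_passed` is hypothetical. It looks for the pass markers these two samples print at the end of a successful run: `Result = PASS` for deviceQuery and `Test PASSED` for vectorAdd.

```shell
# Hypothetical helper: reads a CUDA sample's output on stdin and
# succeeds if it contains one of the expected pass markers.
sample_passed() {
  grep -Eq 'Result = PASS|Test PASSED'
}

# Usage inside the guest (shown as comments because it needs a GPU
# and the samples built from the assumed default location):
#   cd ~/NVIDIA_CUDA-9.1_Samples/1_Utilities/deviceQuery && make
#   ./deviceQuery | sample_passed && echo "deviceQuery OK"
#   cd ~/NVIDIA_CUDA-9.1_Samples/0_Simple/vectorAdd && make
#   ./vectorAdd | sample_passed && echo "vectorAdd OK"
```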